Deep 3D Perception of People and Their Mobility Aids

This website presents our work on detecting people and categorizing them according to the mobility aids they use. It also provides our annotated dataset, which contains five classes: pedestrian, person in a wheelchair, pedestrian pushing a person in a wheelchair, person using crutches, and person using a walking frame.

Publications


Marina Kollmitz, Andreas Eitel, Andres Vasquez, Wolfram Burgard

Deep 3D perception of people and their mobility aids

Robotics and Autonomous Systems (RAS), Vol. 114, 2019

DOI BibTeX Manuscript

Andres Vasquez, Marina Kollmitz, Andreas Eitel, Wolfram Burgard

Deep Detection of People and their Mobility Aids for a Hospital Robot

IEEE European Conference on Mobile Robots (ECMR), Paris, France, 2017

DOI BibTeX Manuscript

Videos

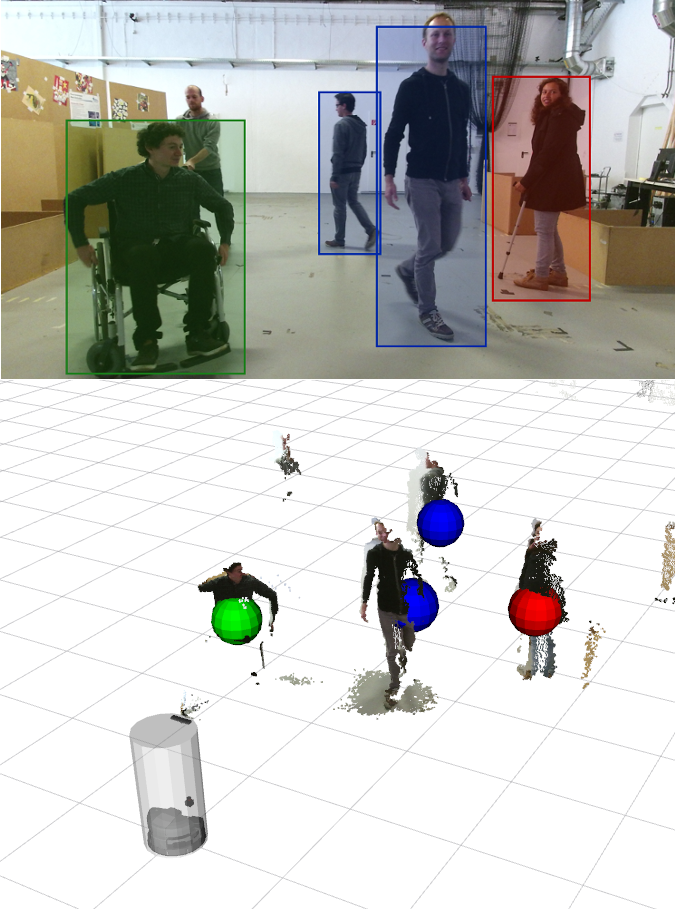

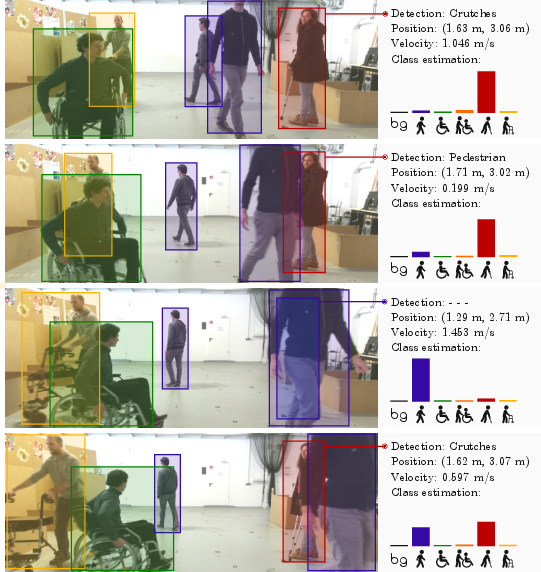

This video shows the 2D bounding box detection as well as the 3D centroid regression performance of our system on an RGB image stream.

Here we show a real-world experiment in which our robot Canny guides visitors to the professor's office. If the robot perceives that a visitor is using a walking aid, it guides them to the elevator; pedestrians are guided to the stairs.

This video shows the performance of our pipeline presented at ECMR 2017 on our MobilityAids dataset and explains some of the underlying concepts.

MobilityAids Code

The mobilityaids code for our 2019 RAS paper is available on GitHub:

- ROS people detector node: mobilityaids_detector

- adapted Detectron code: DetectronDistance

The code for our 2017 ECMR paper is also available on GitHub:

- ROS people detector node: hospital_people_detector

- adapted Fast R-CNN code: fast-rcnn-mobility-aids

MobilityAids Dataset

We collected a hospital dataset with over 17,000 annotated RGB-D images containing people categorized according to the mobility aids they use: pedestrians, people in wheelchairs, people in wheelchairs being pushed by another person, people using crutches, and people using walking frames. The images were recorded at the Faculty of Engineering of the University of Freiburg and in a hospital in Frankfurt.

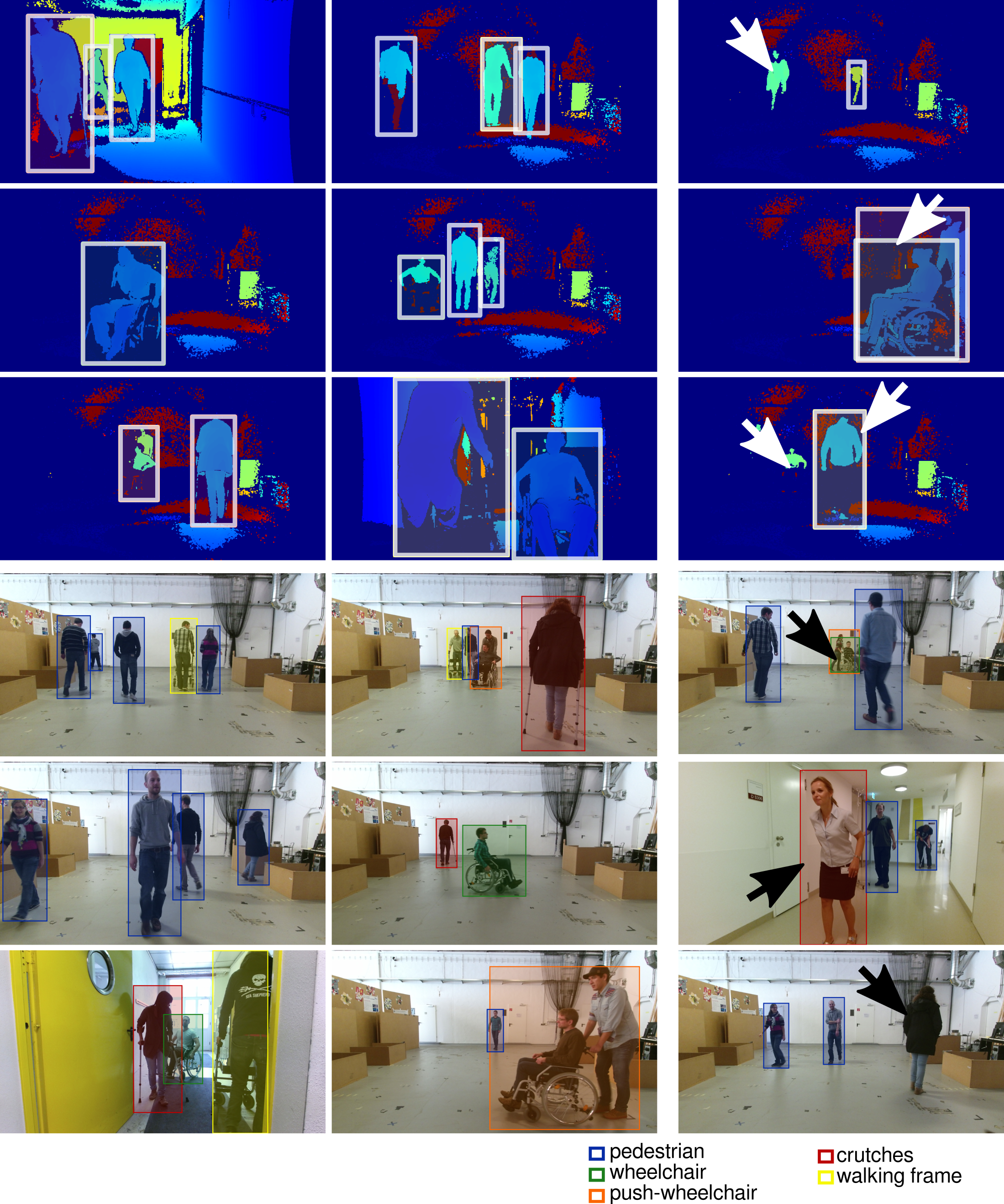

This image shows example frames from the dataset: successful classifications by our pipeline on the right and failure cases of our approach on the left. The top images are DepthJet examples, the bottom images RGB examples.
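DepthJet images encode the single-channel depth map as a three-channel color image using a jet colormap, which lets a network designed for RGB input consume depth. The sketch below illustrates that encoding under stated assumptions: depth in meters, a hypothetical fixed clipping range of [0.5, 8.0] m with linear normalization, invalid pixels mapped to black, and the common piecewise-linear approximation of the jet colormap. The exact range, normalization, and colormap used to produce the dataset's DepthJet images may differ.

```python
import numpy as np


def depth_to_jet(depth_m, d_min=0.5, d_max=8.0):
    """Encode a depth map (meters) as an 8-bit, 3-channel jet image.

    Assumptions (hypothetical, not taken from the dataset): depth is clipped
    to [d_min, d_max] and normalized linearly; zero or non-finite pixels are
    treated as invalid and rendered black.
    """
    depth = np.asarray(depth_m, dtype=np.float64)
    valid = np.isfinite(depth) & (depth > 0)
    # Normalize valid depths to [0, 1]: near -> 0 (blue), far -> 1 (red).
    filled = np.where(valid, depth, d_min)
    x = np.clip((filled - d_min) / (d_max - d_min), 0.0, 1.0)
    # Piecewise-linear approximation of the classic "jet" colormap.
    r = np.clip(1.5 - np.abs(4.0 * x - 3.0), 0.0, 1.0)
    g = np.clip(1.5 - np.abs(4.0 * x - 2.0), 0.0, 1.0)
    b = np.clip(1.5 - np.abs(4.0 * x - 1.0), 0.0, 1.0)
    rgb = np.stack([r, g, b], axis=-1)
    rgb[~valid] = 0.0  # invalid depth -> black
    return np.round(rgb * 255.0).astype(np.uint8)


if __name__ == "__main__":
    # Tiny synthetic depth map: near, middle, far, and one invalid pixel.
    demo = np.array([[0.5, 4.25, 8.0, 0.0]])
    print(depth_to_jet(demo))
```

The unit of the downloadable depth images is not stated on this page; if they store millimeters, divide by 1000 before calling `depth_to_jet`. A fixed range (rather than per-image min-max normalization) keeps the same color meaning the same distance across frames, which is one plausible design choice, not necessarily the one used here.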

Mobility Aids Dataset Download

This dataset is provided for research purposes only. Any commercial use is prohibited. If you use the dataset, please cite our paper:

@INPROCEEDINGS{vasquez17ecmr,
  author = {Andres Vasquez and Marina Kollmitz and Andreas Eitel and Wolfram Burgard},
  title = {Deep Detection of People and their Mobility Aids for a Hospital Robot},
  booktitle = {Proc.~of the IEEE Eur.~Conf.~on Mobile Robotics (ECMR)},
  year = {2017},
  doi = {10.1109/ECMR.2017.8098665},
  url = {http://ais.informatik.uni-freiburg.de/publications/papers/vasquez17ecmr.pdf}
}


Download depth images (960x540, 3.8 GB)

Download depth-jet images (960x540, 1.5 GB)

Download annotations for RGB

Download annotations for RGB test set 2 (occlusions)

Download annotations for depth

Download annotations for depth test set 2 (occlusions)

Download robot odometry for test set 2

Download image set textfiles

Download camera calibration

Download static base-to-camera transformation

Download README file
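The camera calibration and the static base-to-camera transformation can be combined to lift a detection (a pixel plus a centroid depth) into the robot's base frame. The sketch below assumes a standard pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`, depth measured along the optical axis, and a 4x4 homogeneous transform `T_base_cam`. The file formats of the calibration and transformation downloads are not described on this page, so all numeric values below are hypothetical placeholders, not the dataset's calibration.

```python
import numpy as np


def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth (meters along the optical axis)
    to a 3D point in the camera optical frame using the pinhole model."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.array([x, y, depth], dtype=np.float64)


def camera_to_base(p_cam, T_base_cam):
    """Transform a 3D point from the camera frame to the robot base frame
    with a 4x4 homogeneous (rigid) transform."""
    p_h = np.append(p_cam, 1.0)  # homogeneous coordinates
    return (T_base_cam @ p_h)[:3]


# Hypothetical placeholder values, NOT the dataset's calibration:
FX, FY, CX, CY = 540.0, 540.0, 480.0, 270.0
T_BASE_CAM = np.eye(4)
# Optical frame (x right, y down, z forward) -> base frame (x forward, y left, z up).
T_BASE_CAM[:3, :3] = [[0, 0, 1], [-1, 0, 0], [0, -1, 0]]
T_BASE_CAM[:3, 3] = [0.1, 0.0, 1.2]  # camera 0.1 m forward, 1.2 m above the base origin

if __name__ == "__main__":
    # A person centroid at pixel (534, 270), 2 m in front of the camera.
    p_cam = pixel_to_camera(534, 270, 2.0, FX, FY, CX, CY)
    # Expected to be approximately (2.1, -0.2, 1.2) with these placeholders.
    print(camera_to_base(p_cam, T_BASE_CAM))
```

If the provided calibration includes lens distortion, the pixel coordinates would need to be undistorted before back-projection; this sketch ignores distortion.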

The following annotations are taken from sequences 0-2 of the InOutDoorPeople dataset and extended with centroid depth labels. They are not part of our Mobility Aids Dataset, but we used them alongside the Mobility Aids examples to train our models.

Download InOutDoor training annotations for DepthJet

Download InOutDoor training annotations for RGB